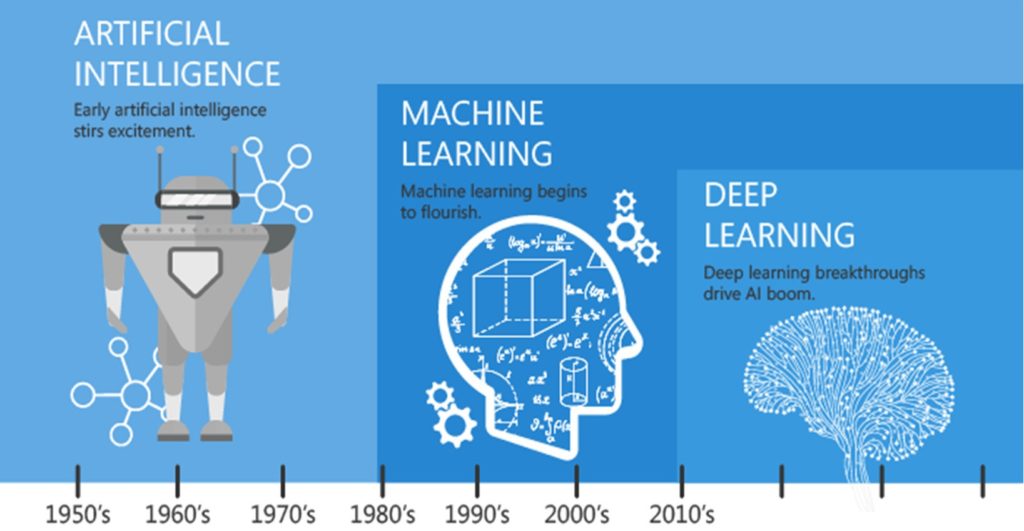

Artificial Intelligence

It’s safe to assume most of us know that AI stands for artificial intelligence. Thanks to blockbuster movies such as “The Terminator” and TV programs like “Battlestar Galactica”, however, what AI actually entails and how it currently affects our daily lives is less clear. In general, and more specifically in our work here at Gravity Jack, AI is the field of computer science that tries to make programs that are more or less “intelligent” through machine learning.

What many believe is the end goal of AI.

For example, consider all the things that we humans can do: talk, read, plan, drive, etc. When you think about it, humans are quite remarkable creatures in the wide variety of tasks that we can accomplish, many without any effort whatsoever. You are reading right now without having to spend too much thought on it! We could type almost anything and you would effortlessly continue to read it, much like you are doing right now.

AI seeks to allow computing machinery to perform these same tasks with human-level or greater performance. In this context, “intelligent” simply means performance at a human level. In fact, one clever computer scientist once defined intelligent behavior as behavior that was indistinguishable from human behavior (insert joke here about whether humans are considered intelligent). What AI does not specify is how we are to achieve this goal, and it’s this very question that leads us to machine learning.

Machine Learning In A Nutshell

Machine Learning (ML) is a subfield of AI. It shares the same goal as AI, human-level performance, but specifies a set of techniques for reaching it. In these techniques, the program or algorithm is not told how to perform the task for which it is developed. Rather, over time, it forms an idea of how to approach the task. To make this concept more concrete, we will describe a task: breast cancer diagnosis. This is a task that is traditionally accomplished by a human expert, a doctor. The doctor receives a sample of suspect tissue (called a biopsy) and then uses their experience to render a diagnosis (malignant or benign).

Imagine writing a computer program that performs breast cancer diagnosis. To make things easier on the programmer, it won’t be necessary for the program to examine raw biopsies. Instead, the program can read laboratory-provided numerical data about biopsies from a file. One approach that the programmer could take is to find an expert. With an expert on hand, the programmer would ask them to explain their evaluation process and then write a program that encapsulates the expert’s knowledge. Such an algorithm could look like this:

- If the average perimeter of the cells is less than .05 units, then output malignant.

- If the average perimeter of the cells is greater than 1 unit, then output benign.

- If the average width of the cells is less than .06 units, then output benign.

- And so on.

This collection of rules is often called an “expert system”. Notice that the algorithm contains pretty precise information about how it will accomplish the task of diagnosis. These rules are provided to the program through the code that is written.
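To make the idea concrete, here is a minimal Python sketch of the rules listed above. The function name `diagnose_with_rules` and the "undetermined" fallback are our own assumptions for illustration (the list simply ends with "and so on"), and the thresholds are the illustrative numbers from the bullets, not real medical criteria.

```python
def diagnose_with_rules(avg_perimeter: float, avg_width: float) -> str:
    """Hand-written expert system: every rule is typed in by the programmer."""
    if avg_perimeter < 0.05:
        return "malignant"
    if avg_perimeter > 1.0:
        return "benign"
    if avg_width < 0.06:
        return "benign"
    # Assumption: the remaining expert rules ("and so on") would go here.
    return "undetermined"


print(diagnose_with_rules(avg_perimeter=0.01, avg_width=0.5))
print(diagnose_with_rules(avg_perimeter=2.0, avg_width=0.5))
```

Nothing in this program is learned. If the expert changes their mind about a threshold, someone has to edit the code.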

Now, what if the programmer didn’t have access to an expert? How would they get the information that is needed? They could become experts themselves! Well, sorta. Imagine that the programmer was provided with a file of 150 biopsies. For each biopsy, the file contains 10 different values describing that biopsy. Each biopsy is further marked as being malignant or benign. This marking of malignant or benign is called the ground truth, or simply the truth, for the example.

What the programmer can do is look through the data and see if they are able to spot any patterns. Perhaps some quick sorting of the data would allow the programmer to find one or two rules that they then could encode into an algorithm. The algorithm would look pretty similar to the expert system above, and indeed, is still an expert system. The difference is that the programmer had to discover or learn the rules from previous data before they could code them up.

Wouldn’t it be nice if we could write a program that could look at the same data that the programmer looked at and then have that program output a set of rules on its own? Could we write a program that learns the rules needed for a successful diagnosis? The answer is yes: enter Machine Learning.

Machine Learning for Friends

In most machine learning scenarios, there are two components: the learning algorithm and the model. The model is the algorithm or program that accomplishes the main task. In our cancer diagnosis above, we seek a model to input information about a biopsy and output a diagnosis. The job of the learning algorithm is to find and output a good model.
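As a rough sketch of these two components, the Python below uses a hypothetical learning algorithm, `learn_threshold_rule`, that searches for the single "feature below or above a threshold" rule that gets the most samples right (sometimes called a decision stump). What it returns is the model: a function from biopsy values to a diagnosis. The four-sample dataset is made up for illustration, and this is only one of many possible learning algorithms.

```python
from typing import Callable, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]      # (feature values, ground-truth label)
Model = Callable[[Sequence[float]], str]  # biopsy values in, diagnosis out


def learn_threshold_rule(dataset: List[Sample]) -> Model:
    """Learning algorithm: try every feature, threshold, and label pairing,
    keep the rule that classifies the most samples correctly."""
    labels = sorted({label for _, label in dataset})
    n_features = len(dataset[0][0])
    best = None  # (correct_count, feature_index, threshold, below, above)
    for f in range(n_features):
        for features, _ in dataset:
            threshold = features[f]
            for below in labels:
                for above in labels:
                    correct = sum(
                        1
                        for x, y in dataset
                        if (below if x[f] <= threshold else above) == y
                    )
                    if best is None or correct > best[0]:
                        best = (correct, f, threshold, below, above)
    _, f, threshold, below, above = best

    def model(features: Sequence[float]) -> str:
        return below if features[f] <= threshold else above

    return model


# Made-up values for illustration only.
toy_dataset = [
    ([0.2, 1.1], "benign"),
    ([0.3, 0.9], "benign"),
    ([0.8, 1.0], "malignant"),
    ([0.9, 1.2], "malignant"),
]
model = learn_threshold_rule(toy_dataset)
print(model([0.1, 1.0]))  # benign
print(model([1.0, 1.0]))  # malignant
```

The rule itself never appears in the source code. It is discovered from the data, which is the key difference from the hand-written expert system.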

Of crucial importance to most ML tasks is the dataset, which contains sample inputs and their sample outputs. In our example above, the dataset was the file that contained 150 sample biopsies. Importantly, each sample was also marked to identify if it was malignant or benign. Now, interestingly enough, not all datasets come labeled with sample outputs. Those that do are said to be ready for supervised learning. Think of it as a supervisor or overseer in charge of the data who is responsible for marking the outputs accurately. In unsupervised learning, the dataset is not marked with what the sample output should be because there is no supervisor to indicate the correct behavior.

Thankfully, our breast cancer dataset is marked with the correct diagnosis, so we can easily use supervised learning. A great deal of effort often goes into creating and curating a dataset. Some ML practitioners say that 80% of a machine learning project is tied up in creating the dataset — a number the engineers here at Gravity Jack agree with.

So, our process now is: create a dataset, feed it to a learning algorithm, let the learning algorithm output a model, and then use the model to perform the task. This process of feeding a dataset to a learning algorithm and outputting a model is called training.

How Good is the Model?

Now, imagine we have trained a model. Before we send it off to do its work (called deployment), it’s important to measure how well it performs. This turns out to be quite a big topic, one we will handle briefly and delicately.

There are several ways we can see how well the model is doing. The first is to take the model and run it on the dataset. From there, we can directly compare our model’s prediction on each biopsy to the sample’s correct diagnosis. Since this is the same dataset that our model was trained on, favorable results are to be expected.

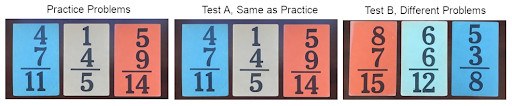

For fun, let us think of our model as a student trying to learn addition. We present the student with example problems to learn, and then follow those examples with a test to track comprehension. If they do well on the test, then can we say with confidence that they will be able to solve any future addition problems? Not necessarily.

What if the student just memorized the answers to their practice problems? When they get their exam, all they need to do is write down the answers they memorized. There is no need for this student to do any addition. The student will still get a good grade on the exam, but what happens when the student is faced with similar, but distinct, math problems in the future? What we really want is for the student to learn a concept that they can apply to future problems. We want the same for our trained models, a property known as good generalization.
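The memorizing student can be sketched in a few lines of Python. Here `train_memorizer` is a hypothetical "learning algorithm" that stores every training sample in a lookup table and falls back to a fixed guess for anything it has not seen. The names and the data values are made up for illustration.

```python
def accuracy(model, dataset):
    """Fraction of samples where the prediction matches the ground truth."""
    correct = sum(1 for features, label in dataset if model(features) == label)
    return correct / len(dataset)


def train_memorizer(training_set, default="benign"):
    """Memorize each training input with its answer; guess `default` otherwise."""
    lookup = {tuple(features): label for features, label in training_set}

    def model(features):
        return lookup.get(tuple(features), default)

    return model


# Made-up values for illustration only.
training_set = [
    ((0.2, 1.1), "benign"),
    ((0.3, 0.9), "benign"),
    ((0.8, 1.0), "malignant"),
    ((0.9, 1.2), "malignant"),
]
unseen_set = [
    ((0.25, 1.0), "benign"),
    ((0.85, 1.1), "malignant"),
    ((0.95, 0.9), "malignant"),
    ((0.7, 1.0), "malignant"),
]

memorizer = train_memorizer(training_set)
train_acc = accuracy(memorizer, training_set)
unseen_acc = accuracy(memorizer, unseen_set)
print(train_acc, unseen_acc)  # 1.0 0.25
```

A perfect score on the data the model was trained on tells us very little. The score on samples it has never seen is the one that reflects generalization.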

What we want is to give our imagined student a test composed of problems that are similar to, but different from, the ones they practiced on. That way, if the student has learned the principles of addition, they will do well on an exam with new addition problems. In ML this translates to one dataset for training the model and another for testing it. These two datasets are called the training set and the testing set. The usual method for creating them is to randomly split the original dataset in two. Usually, the training set is about 80% of the original dataset and the remaining 20% is the testing set.
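A random split like this takes only a few lines. The sketch below uses Python's standard library; the function name `train_test_split`, the fixed seed, and the placeholder records are our own assumptions, with 20% of the 150 samples held out for testing.

```python
import random


def train_test_split(dataset, test_fraction=0.2, seed=0):
    """Shuffle a copy of the dataset, then hold out a fraction for testing."""
    shuffled = list(dataset)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Placeholder records standing in for the 150 labeled biopsies.
dataset = [f"biopsy_{i}" for i in range(150)]
training_set, testing_set = train_test_split(dataset)
print(len(training_set), len(testing_set))  # 120 30
```

Shuffling before cutting matters: if the file happened to list all the benign samples first, a straight cut would put very different data in the two sets.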

So now the process is: create a dataset, split it into a training set and a testing set, feed the training set to the learning algorithm, which outputs the model, and then test the model using the testing set.

Some practitioners take this one step further. They worry that the split into training and testing sets may have created a set that contains too many “easy questions” or too many “hard questions.” In order to correct for fortunate or unfortunate splits, they perform the split, train, test portion of the pipeline many times over and aggregate the testing results in a process called cross-validation.
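One common way to do this is k-fold cross-validation: shuffle the data, cut it into k equal parts (folds), and let each fold take a turn as the testing set while the rest is used for training. The sketch below is a minimal version under our own assumptions: made-up one-value samples, a simple nearest-neighbor learner, and k = 5.

```python
import random
from statistics import mean


def train_nearest_neighbor(training_set):
    """Model that predicts the label of the closest training sample."""
    def model(x):
        _, label = min(training_set, key=lambda sample: abs(sample[0] - x))
        return label
    return model


def accuracy(model, dataset):
    return sum(1 for x, y in dataset if model(x) == y) / len(dataset)


def cross_validate(dataset, train, k=5, seed=0):
    """Return one test accuracy per fold; each fold is the testing set once."""
    shuffled = list(dataset)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    scores = []
    for i, testing_set in enumerate(folds):
        training_set = [s for j, fold in enumerate(folds) if j != i for s in fold]
        scores.append(accuracy(train(training_set), testing_set))
    return scores


# Made-up data: small values are labeled benign, large ones malignant.
dataset = [(i / 100, "benign" if i < 50 else "malignant") for i in range(100)]
scores = cross_validate(dataset, train_nearest_neighbor)
average_score = mean(scores)
print(scores, average_score)
```

Reporting the average (and the spread) of the per-fold scores gives a steadier estimate than any single lucky or unlucky split.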

Where Next?

Phew! We know that is a lot of information to take in, but it’s the foundation for all things Machine Learning and a critical step for many of the projects we work on at Gravity Jack. If you’re interested in taking what was discussed here a step further, we recommend spending some time learning a few machine learning algorithms such as Nearest Neighbor and Decision Trees.

If you want to see what all the Deep Learning fuss is about, check out some neural network basics. We recommend starting with the Perceptron, then working through Back Propagation and Stochastic Gradient Descent. Additionally, there are tools that allow you to experiment with these concepts; we recommend Python’s scikit-learn.

Not to worry, our deep dive into AI and machine learning doesn’t stop here! We are working on many more blog posts that take an even deeper dive into these topics, including network attribution. Stay tuned as we will be posting them in the coming weeks! Have questions about our process and how it works? Feel free to contact us and we would be happy to answer any of your questions.